MiaBella ANN is an interactive, web-based visualization tool for exploring the inner workings of artificial neural networks.

The program shows a deep learning neural network with up to six layers. You can change the layers, the inputs, and even the number of outputs.

This application performs a gradient descent optimization on whichever neural network configuration you choose. A new data set is generated randomly each time the network is drawn, and the outputs are computed from randomly generated equations applied to the inputs.

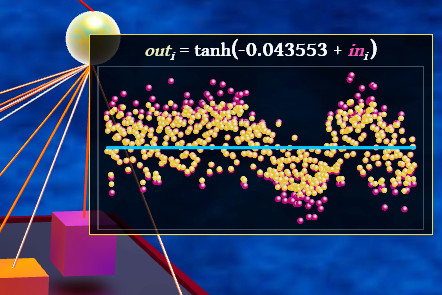

As the optimization proceeds, you can view in real time how the neurons and their connections change to fit the data.

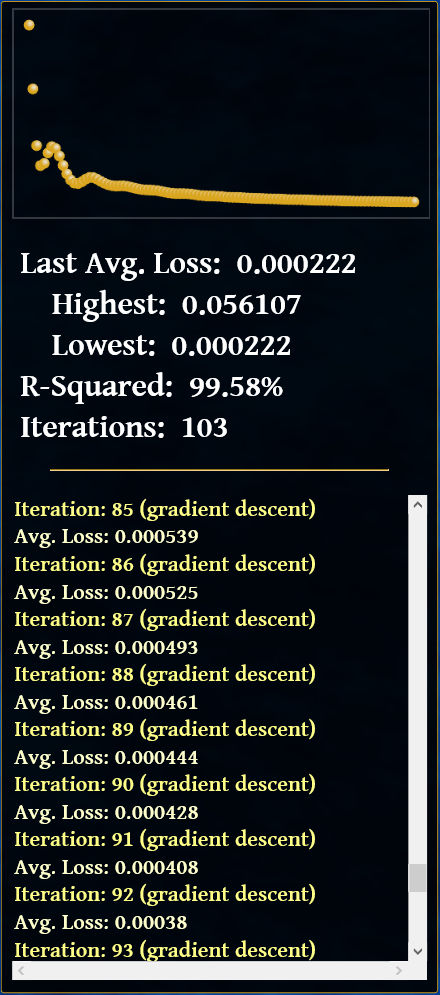

The output panel displays how the cost function progresses through the optimization process.

You can rotate the plot, zoom in, and even select individual elements to see how they develop as the neural network learns.

There are several learning methods to choose from, including RMSprop and Adam.
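As a rough illustration of what one of these learning methods does, here is a minimal sketch of a single Adam update in TypeScript. The names `AdamState`, `adamStep`, and `adamDemo` are hypothetical, and the default hyperparameters are the commonly quoted ones. This is not the app's own code.

```typescript
// Hypothetical sketch of the Adam update rule for a flat parameter vector.
interface AdamState {
  m: number[]; // running mean of the gradients (first moment)
  v: number[]; // running mean of the squared gradients (second moment)
  t: number;   // number of updates performed so far
}

function adamStep(
  params: number[],
  grads: number[],
  state: AdamState,
  lr = 0.001,
  beta1 = 0.9,
  beta2 = 0.999,
  eps = 1e-8
): void {
  state.t += 1;
  for (let i = 0; i < params.length; i++) {
    state.m[i] = beta1 * state.m[i] + (1 - beta1) * grads[i];
    state.v[i] = beta2 * state.v[i] + (1 - beta2) * grads[i] * grads[i];
    // Bias correction compensates for m and v starting at zero.
    const mHat = state.m[i] / (1 - Math.pow(beta1, state.t));
    const vHat = state.v[i] / (1 - Math.pow(beta2, state.t));
    params[i] -= (lr * mHat) / (Math.sqrt(vHat) + eps);
  }
}

// Illustrative use: minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
function adamDemo(steps: number): number {
  const params = [0];
  const state: AdamState = { m: [0], v: [0], t: 0 };
  for (let s = 0; s < steps; s++) {
    adamStep(params, [2 * (params[0] - 3)], state, 0.1);
  }
  return params[0];
}
```

RMSprop follows the same pattern but keeps only the squared-gradient average `v` and omits the momentum term `m` and the bias correction.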

Other features include Xavier initialization, batch normalization, and numerical gradient checking.
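For readers unfamiliar with Xavier initialization, the sketch below shows the uniform variant, where each weight is drawn from a range scaled by the layer's fan-in and fan-out. The function name `xavierUniform` is hypothetical, and whether the app uses the uniform or the normal variant is an assumption here.

```typescript
// Hypothetical sketch of Xavier (Glorot) uniform initialization.
// Weights are drawn from U(-limit, limit) with limit = sqrt(6 / (fanIn + fanOut)).
function xavierUniform(
  fanIn: number,
  fanOut: number,
  rand: () => number = Math.random
): number[][] {
  const limit = Math.sqrt(6 / (fanIn + fanOut));
  const weights: number[][] = [];
  for (let row = 0; row < fanOut; row++) {
    const w: number[] = [];
    for (let col = 0; col < fanIn; col++) {
      w.push((rand() * 2 - 1) * limit); // map [0, 1) onto [-limit, limit)
    }
    weights.push(w);
  }
  return weights;
}
```

Calling `xavierUniform(3, 5)` would return a 5-by-3 weight matrix for a layer with three inputs and five neurons.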

Check out the FAQ section for more details on the creation of this 3D web app.

Frequently Asked Questions

Can I use this on my computer?

This program works on Windows and Mac OS X with the latest versions of Chrome, Firefox®, Safari, Opera, and Microsoft Edge.

What tools were used to create this?

This visualization app was written in TypeScript, using BabylonJS as the WebGL™ 3D graphics engine.

Does this app do optimizations?

This program uses backpropagation to perform a gradient descent optimization with a modest number of iterations.
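To make that concrete, here is a small sketch of backpropagation with plain gradient descent on a tiny network (one input, one tanh hidden layer, one linear output). Everything in it is an assumption for illustration: the names `makeRng` and `trainTinyNetwork`, the network size, and the quadratic "random equation" used to generate targets. The app's own architecture and data generation may differ.

```typescript
// Hypothetical seeded generator so that runs are repeatable.
function makeRng(seed: number): () => number {
  let s = seed >>> 0;
  return () => {
    s = (Math.imul(s, 1664525) + 1013904223) >>> 0;
    return s / 4294967296;
  };
}

// Trains the tiny network and returns the cost recorded at each epoch.
function trainTinyNetwork(epochs: number, lr: number): number[] {
  const rng = makeRng(42);
  const hidden = 4;
  // Random data set; the target equation y = a*x + b*x^2 is an assumption.
  const a = rng() * 2 - 1;
  const b = rng() * 2 - 1;
  const xs = Array.from({ length: 20 }, () => rng() * 2 - 1);
  const ys = xs.map((x) => a * x + b * x * x);
  // Parameters: hidden weights and biases, output weights and bias.
  const w1 = Array.from({ length: hidden }, () => rng() - 0.5);
  const b1 = new Array<number>(hidden).fill(0);
  const w2 = Array.from({ length: hidden }, () => rng() - 0.5);
  let b2 = 0;
  const costs: number[] = [];
  for (let e = 0; e < epochs; e++) {
    const gw1 = new Array<number>(hidden).fill(0);
    const gb1 = new Array<number>(hidden).fill(0);
    const gw2 = new Array<number>(hidden).fill(0);
    let gb2 = 0;
    let cost = 0;
    for (let n = 0; n < xs.length; n++) {
      // Forward pass.
      const h = w1.map((w, j) => Math.tanh(w * xs[n] + b1[j]));
      const out = h.reduce((sum, hj, j) => sum + w2[j] * hj, b2);
      const err = out - ys[n];
      cost += (0.5 * err * err) / xs.length;
      // Backward pass: apply the chain rule from the output back to the inputs.
      const dOut = err / xs.length;
      gb2 += dOut;
      for (let j = 0; j < hidden; j++) {
        gw2[j] += dOut * h[j];
        const dPre = dOut * w2[j] * (1 - h[j] * h[j]); // tanh'(z) = 1 - tanh(z)^2
        gw1[j] += dPre * xs[n];
        gb1[j] += dPre;
      }
    }
    costs.push(cost);
    // Gradient descent update.
    for (let j = 0; j < hidden; j++) {
      w1[j] -= lr * gw1[j];
      b1[j] -= lr * gb1[j];
      w2[j] -= lr * gw2[j];
    }
    b2 -= lr * gb2;
  }
  return costs;
}
```

The returned array plays the role of a cost history: plotting it against the epoch number gives a curve showing how the cost changes as training proceeds.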

Can I check the backpropagation calculations?

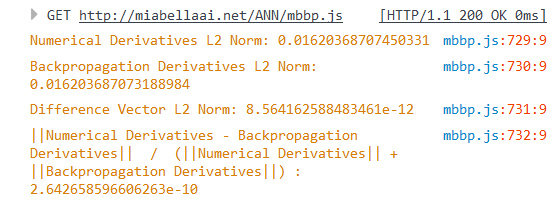

If you press the "g" key on your keyboard, this program will run a gradient check on the 100th epoch to compare the numerical and backpropagation gradients.

The results are printed to your browser's JavaScript console.
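A gradient check of this kind can be sketched as follows: estimate each partial derivative with a central difference, then compare it with the analytic (backpropagation) gradient using a relative error. The names `numericalGradient`, `relativeError`, and `gradientCheckDemo`, the step size, and the single-neuron example are all assumptions for illustration, not the app's actual routine.

```typescript
type LossFn = (params: number[]) => number;

// Central-difference estimate of dLoss/dParam for every parameter.
function numericalGradient(loss: LossFn, params: number[], h = 1e-5): number[] {
  const grad: number[] = [];
  for (let i = 0; i < params.length; i++) {
    const saved = params[i];
    params[i] = saved + h;
    const plus = loss(params);
    params[i] = saved - h;
    const minus = loss(params);
    params[i] = saved; // restore the original value
    grad.push((plus - minus) / (2 * h));
  }
  return grad;
}

// Relative error ||a - b|| / (||a|| + ||b||); small values indicate agreement.
function relativeError(a: number[], b: number[]): number {
  let diff = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    diff += (a[i] - b[i]) * (a[i] - b[i]);
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return Math.sqrt(diff) / (Math.sqrt(normA) + Math.sqrt(normB) + 1e-12);
}

// Example: one tanh neuron with a squared-error loss on a single sample.
function gradientCheckDemo(): number {
  const x = [0.5, -1.2];
  const y = 0.3;
  const w = [0.1, 0.4];
  const loss: LossFn = (p) => {
    const a = Math.tanh(p[0] * x[0] + p[1] * x[1]);
    return 0.5 * (a - y) * (a - y);
  };
  // Analytic gradient via the chain rule (what backpropagation computes).
  const a = Math.tanh(w[0] * x[0] + w[1] * x[1]);
  const delta = (a - y) * (1 - a * a);
  const analytic = [delta * x[0], delta * x[1]];
  return relativeError(numericalGradient(loss, w), analytic);
}

console.log("relative error:", gradientCheckDemo());
```

A very small relative error suggests that the analytic gradients are implemented correctly, while a large one usually points to a bug in the backward pass.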

What does a specific part of the neural network do?

If you hover your mouse pointer over a node, a chart will show the node's input and output. Click your mouse button or press the "x" key to zoom in and out of this chart.

How did you implement batch normalization in the backpropagation?

The derivatives are based on work by Frederik Kratzert and Clément Thorey. The batch normalization gradients are included in the gradient check described above.
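For reference, a commonly used compact form of the batch normalization backward pass looks like the sketch below, shown for a single feature across a mini-batch. The names `batchNormForward`, `batchNormBackward`, and `BatchNormCache` are hypothetical, and it is an assumption that the app arranges its derivatives this way.

```typescript
interface BatchNormCache {
  xhat: number[]; // normalized inputs
  invStd: number; // 1 / sqrt(variance + eps)
  gamma: number;  // scale parameter
}

// Forward pass for one feature over a mini-batch of scalar inputs.
function batchNormForward(
  x: number[],
  gamma: number,
  beta: number,
  eps = 1e-5
): { y: number[]; cache: BatchNormCache } {
  const n = x.length;
  const mean = x.reduce((s, v) => s + v, 0) / n;
  const variance = x.reduce((s, v) => s + (v - mean) * (v - mean), 0) / n;
  const invStd = 1 / Math.sqrt(variance + eps);
  const xhat = x.map((v) => (v - mean) * invStd);
  const y = xhat.map((v) => gamma * v + beta);
  return { y, cache: { xhat, invStd, gamma } };
}

// Backward pass: given dLoss/dy, return dLoss/dx, dLoss/dgamma, dLoss/dbeta.
function batchNormBackward(
  dy: number[],
  cache: BatchNormCache
): { dx: number[]; dgamma: number; dbeta: number } {
  const { xhat, invStd, gamma } = cache;
  const n = dy.length;
  const dbeta = dy.reduce((s, v) => s + v, 0);
  const dgamma = dy.reduce((s, v, i) => s + v * xhat[i], 0);
  // dx_i = (gamma * invStd / n) * (n * dy_i - sum(dy) - xhat_i * sum(dy * xhat))
  const dx = dy.map(
    (v, i) => ((gamma * invStd) / n) * (n * v - dbeta - xhat[i] * dgamma)
  );
  return { dx, dgamma, dbeta };
}
```

Because every input influences the batch mean and variance, each `dx` entry depends on the whole batch, which is why these gradients are worth verifying numerically.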

Where is this program?

The base directory is: miabellaai.net/neural-network-visualization